Artificial Intelligence (AI) has revolutionized the way machines understand and process human language. At the core of many modern AI systems is the concept of embeddings. These dense vector representations translate textual or numerical data into a format that machine learning models can understand. Whether you’re building a recommendation engine, a chatbot, or a semantic search tool, choosing the right embedding model and dimensions can have a significant impact on performance.

What Are AI Embeddings?

In simplest terms, embeddings are numerical representations of objects (typically words, sentences, images, or other data types) mapped into a continuous vector space. In this space, similar objects are positioned closer together, so semantic similarity can be measured with simple vector operations such as cosine similarity or Euclidean distance. For example, in a high-quality word embedding space, words like “king” and “queen” have similar vectors, reflecting their related meanings.
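A minimal sketch of how “closer together” is usually measured: cosine similarity compares the direction of two vectors, giving 1.0 for vectors pointing the same way and 0.0 for orthogonal ones. The four-dimensional vectors below are hypothetical values chosen by hand for illustration; they do not come from any trained model.

```python
import math

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 = same direction, 0.0 = orthogonal."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical 4-dimensional vectors, hand-picked for illustration only;
# real models learn hundreds of dimensions from data.
king = [0.9, 0.8, 0.1, 0.2]
queen = [0.85, 0.75, 0.9, 0.2]
apple = [0.1, 0.0, 0.2, 0.95]

print(round(cosine_similarity(king, queen), 3))
print(round(cosine_similarity(king, apple), 3))
```

With these toy values, “king” scores noticeably higher against “queen” than against “apple”. Numerical libraries compute the same quantity far faster on large batches, but the formula is unchanged.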

Embeddings allow machines to capture complex features and relationships within data, which are often lost in traditional sparse representations like one-hot encoding. The effectiveness of these vectors depends significantly on two factors:

- The model used to generate the embedding

- The dimensionality (length) of the embedding vector
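To see what sparse one-hot encoding loses, compare cosine similarities under both representations. The three-word vocabulary and the dense vectors are hypothetical, chosen only to illustrate the point.

```python
import math

def cosine_similarity(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

vocab = ["cat", "dog", "car"]

def one_hot(word: str) -> list[float]:
    """Sparse encoding: a vector as long as the vocabulary with a single 1."""
    return [1.0 if w == word else 0.0 for w in vocab]

# Hypothetical dense vectors (illustrative values, not from a trained model).
dense = {
    "cat": [0.8, 0.6, 0.1],
    "dog": [0.7, 0.7, 0.2],
    "car": [0.1, 0.2, 0.9],
}

for a, b in [("cat", "dog"), ("cat", "car")]:
    print(a, b,
          "one-hot:", cosine_similarity(one_hot(a), one_hot(b)),
          "dense:", round(cosine_similarity(dense[a], dense[b]), 3))
```

Every pair of distinct one-hot vectors is orthogonal, so their cosine similarity is always 0: the encoding cannot express that “cat” is closer to “dog” than to “car”. The dense toy vectors can.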

Types of Embedding Models

Dozens of embedding models are available today, each optimized for different use cases. Here are some commonly used categories and examples:

1. Static Word Embeddings

These are pre-trained embeddings where each word has a fixed vector, regardless of context.

- Word2Vec: Learns a vector for each word by predicting words from their surrounding context (or the context from the word). The resulting embeddings capture linguistic relationships through simple vector arithmetic.

- GloVe: Developed at Stanford, GloVe learns embeddings from global word co-occurrence statistics gathered across the whole corpus, which helps it preserve global semantic structure.
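GloVe’s starting point, global co-occurrence statistics, can be sketched as a simple window count over a corpus. This is a simplified illustration: the toy corpus and window size are assumptions, plain counts are used where the reference GloVe implementation down-weights distant word pairs, and the step that actually fits word vectors to these counts is omitted.

```python
from collections import Counter

def cooccurrence_counts(tokens: list[str], window: int = 2) -> Counter:
    """Count how often each (word, context_word) pair appears within `window` positions."""
    counts = Counter()
    for i, word in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:  # a word is not its own context
                counts[(word, tokens[j])] += 1
    return counts

# Hypothetical toy corpus; real training uses billions of tokens.
corpus = "the cat sat on the mat the dog sat on the rug".split()
counts = cooccurrence_counts(corpus, window=2)
print(counts[("cat", "sat")], counts[("dog", "sat")], counts[("sat", "on")])
```

Because the window is symmetric, the counts are symmetric too: “cat” and “dog” both co-occur with “sat”, which is the kind of shared context that pushes their learned vectors together.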

2. Contextual Word Embeddings

Popularized by models like BERT, contextual embeddings generate a different representation for a word depending on how it is used within a sentence.

- BERT (Bidirectional Encoder Representations from Transformers): Captures the left and right context of words, resulting in more nuanced embeddings.

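- RoBERTa: A robustly optimized BERT variant that keeps BERT’s architecture but improves the training procedure, for example with more training data, longer training, dynamic masking, and no next-sentence prediction objective.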

3. Sentence and Document Embeddings

These embeddings represent longer pieces of text, aiming to capture overall semantic meaning.

- Sentence-BERT: Modifies BERT using a siamese network structure to generate sentence-level embeddings efficiently and with greater semantic accuracy.

- Universal Sentence Encoder: Google’s model trained for sentence-level similarities across multiple tasks.
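One common way to turn per-token outputs into a single sentence vector is mean pooling, which Sentence-BERT uses as its default pooling strategy. The sketch below averages token vectors while skipping padding positions; the token vectors and attention mask are hypothetical toy values rather than real model outputs.

```python
def mean_pool(token_vectors: list[list[float]], attention_mask: list[int]) -> list[float]:
    """Average the vectors of real tokens (mask == 1), ignoring padding (mask == 0)."""
    dim = len(token_vectors[0])
    summed = [0.0] * dim
    n_real = 0
    for vec, keep in zip(token_vectors, attention_mask):
        if keep:
            n_real += 1
            for k in range(dim):
                summed[k] += vec[k]
    if n_real == 0:
        raise ValueError("attention_mask has no real tokens")
    return [s / n_real for s in summed]

# Hypothetical 2-dimensional token outputs; the last row is padding.
tokens = [[1.0, 2.0], [3.0, 4.0], [0.0, 0.0]]
mask = [1, 1, 0]
print(mean_pool(tokens, mask))
```

Excluding padding matters: averaging the padded zero row as well would shrink the sentence vector toward the origin and make sentences of different lengths harder to compare.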

Choosing the Right Model

Selecting the appropriate embedding model is critical and should be based on:

- Task Requirements: If you’re building a context-sensitive application such as a translation engine, contextual embeddings like BERT generally work better. For keyword clustering, static models may suffice.

- Speed vs. Accuracy: Transformer-based models yield highly accurate embeddings but are computationally intensive. Lightweight models like FastText or Word2Vec are faster but less sophisticated.

- Pre-training vs. Fine-tuning: Some embeddings work well out-of-the-box, while others benefit significantly from being fine-tuned on domain-specific data.

The Importance of Dimensionality

Embedding dimensionality refers to the number of elements in the vector used to represent data. Striking the right balance between dimensionality and performance is essential:

- Low-dimensional embeddings (50–200 dimensions): Suitable for information retrieval, basic clustering, and visualization. They consume less memory and are computationally efficient but may miss subtle semantic information.

- High-dimensional embeddings (300–1024+ dimensions): Capture more nuance and complexity, which helps with tasks like paraphrase detection or sentiment analysis. However, they require more computation and are more prone to overfitting when the training dataset is limited.
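The memory side of this trade-off is simple arithmetic: raw storage grows linearly with dimensionality. A rough estimate, assuming uncompressed float32 values (4 bytes each) and ignoring any index or metadata overhead:

```python
def index_size_bytes(num_vectors: int, dim: int, bytes_per_value: int = 4) -> int:
    """Raw storage for a dense matrix of embeddings (float32 = 4 bytes per value)."""
    return num_vectors * dim * bytes_per_value

for dim in (100, 300, 512, 1024):
    gb = index_size_bytes(1_000_000, dim) / 1e9
    print(f"{dim:>5} dims: {gb:.2f} GB for 1M float32 vectors")
```

For one million vectors this works out to about 0.4 GB at 100 dimensions versus about 4.1 GB at 1024 dimensions, before any compression or quantization.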

More dimensions aren’t always better. Using too many can lead to the “curse of dimensionality,” where distances between vectors become less discriminative and model performance can deteriorate.
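A small simulation illustrates one face of the curse of dimensionality, known as distance concentration: as the number of dimensions grows, the nearest and farthest points from a query end up at nearly the same distance. This toy uses uniformly random points, an assumption that real embeddings do not satisfy, so treat it as an intuition aid rather than a measurement of any particular model.

```python
import math
import random

def relative_contrast(dim: int, num_points: int = 200, seed: int = 0) -> float:
    """(max - min) / min Euclidean distance from one random query to random points."""
    rng = random.Random(seed)  # fixed seed for reproducibility
    query = [rng.random() for _ in range(dim)]
    dists = []
    for _ in range(num_points):
        point = [rng.random() for _ in range(dim)]
        dists.append(math.dist(query, point))
    return (max(dists) - min(dists)) / min(dists)

for dim in (2, 10, 100, 1000):
    print(dim, round(relative_contrast(dim), 3))
```

The printed relative contrast shrinks sharply as `dim` increases, meaning “nearest” carries less information in very high-dimensional random data.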

Comparing Embedding Dimensions

When evaluating which vector size to use, consider the trade-offs:

| Dimension Size | Pros | Cons |
|---|---|---|
| 50–100 | Fast to compute, low space cost | Limited semantic detail |
| 200–300 | Good balance of detail and efficiency | May still miss contextual nuances |
| 512–1024+ | Excellent semantic depth | Expensive in terms of memory and processing |

Real-World Applications of Embeddings

Embeddings power some of the most impactful AI technologies we use today. Here are a few notable examples:

- Semantic Search: Embeddings allow search engines to return results based on semantic relevance instead of keyword matching.

- Chatbots & Virtual Assistants: User queries are embedded into a vector space to find appropriate responses or actions.

- Recommendation Systems: Products, users, or interactions are embedded and compared to surface relevant recommendations.

- Fraud Detection: Transactions or users can be embedded to detect anomalies based on distance and clustering behaviors.
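At its core, semantic search ranks stored embeddings by similarity to the query embedding. A brute-force sketch, assuming the documents and the query have already been embedded by the same model; the three-dimensional vectors and document IDs here are hypothetical.

```python
import math

def normalize(v: list[float]) -> list[float]:
    """Scale a vector to unit length so a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def top_k(query_vec: list[float], doc_vecs: dict[str, list[float]], k: int = 2):
    """Rank documents by cosine similarity to the query (linear scan)."""
    q = normalize(query_vec)
    scored = []
    for doc_id, vec in doc_vecs.items():
        d = normalize(vec)
        score = sum(a * b for a, b in zip(q, d))
        scored.append((score, doc_id))
    scored.sort(reverse=True)  # highest similarity first
    return [(doc_id, round(score, 3)) for score, doc_id in scored[:k]]

docs = {
    "refund-policy": [0.9, 0.1, 0.0],
    "shipping-times": [0.2, 0.9, 0.1],
    "return-label": [0.6, 0.5, 0.2],
}
query = [0.85, 0.2, 0.05]  # hypothetical embedding of "how do I get my money back?"
print(top_k(query, docs))
```

Production systems typically replace the linear scan with an approximate nearest-neighbor index, but the ranking idea is the same. The same distances also underpin the fraud-detection case: points far from every cluster are candidates for review.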

Evaluating Embedding Quality

Evaluating the quality of embeddings is an essential step. Poorly chosen embeddings can degrade performance across AI pipelines. Consider these strategies:

- Intrinsic Evaluations: Word similarity and analogy tests (e.g., solving “king is to queen as man is to ?”) can give quick snapshots of embedding quality.

- Extrinsic Evaluations: Integrate embeddings into actual downstream tasks (like text classification or question answering) and measure performance metrics such as accuracy or F1-score.

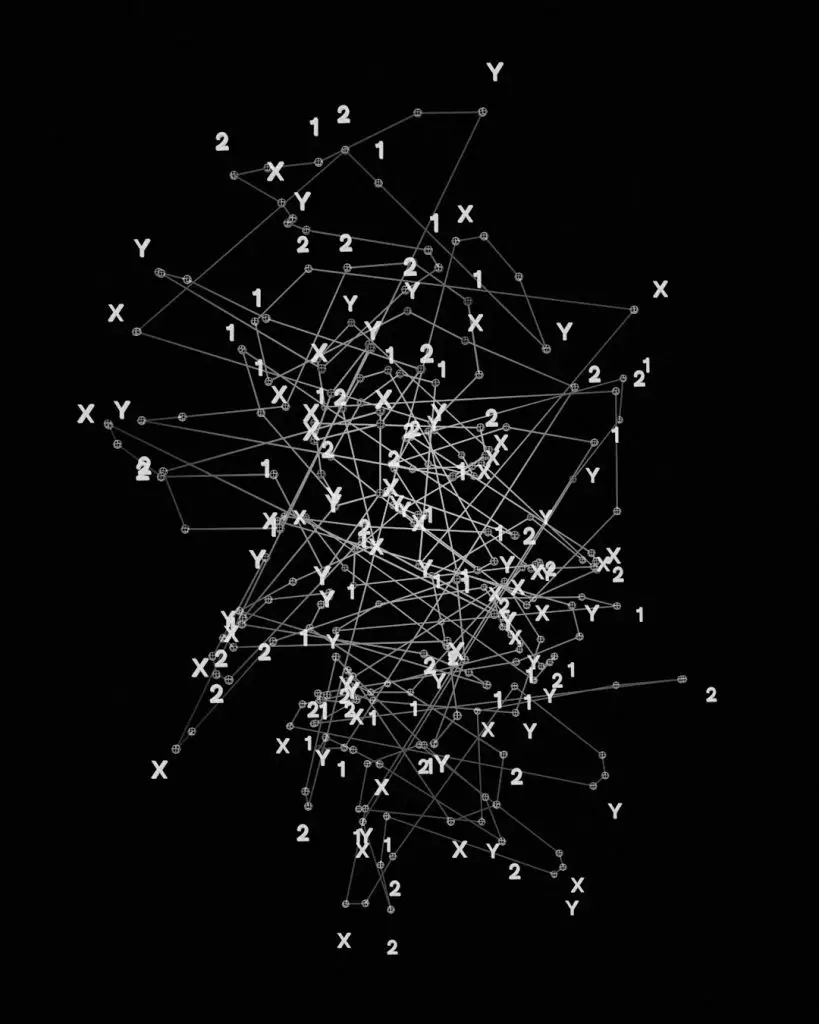

- Visualization: Use tools like t-SNE or PCA to visually inspect the embedding space. Clustering and spatial relationships reveal how well the model captures semantic similarity.
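The analogy test can be sketched with vector arithmetic: the answer to “a is to b as c is to ?” is taken to be the word whose vector is closest (by cosine similarity) to b − a + c, with the three query words excluded from the candidates. The tiny vocabulary and vectors below are hypothetical, constructed so the arithmetic works out cleanly; real evaluations run many such questions against a trained model.

```python
import math

def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

# Hypothetical toy vectors; the dimensions loosely stand for
# "royalty", "femaleness", and "being a person".
vectors = {
    "king":  [0.9, 0.1, 0.8],
    "queen": [0.9, 0.9, 0.8],
    "man":   [0.1, 0.1, 0.8],
    "woman": [0.1, 0.9, 0.8],
    "apple": [0.1, 0.0, 0.05],
}

def solve_analogy(a: str, b: str, c: str) -> str:
    """Answer 'a is to b as c is to ?' by finding the word closest to b - a + c."""
    target = [vb - va + vc for va, vb, vc in zip(vectors[a], vectors[b], vectors[c])]
    candidates = [w for w in vectors if w not in (a, b, c)]  # exclude the query words
    return max(candidates, key=lambda w: cosine(vectors[w], target))

print(solve_analogy("king", "queen", "man"))
```

The accuracy over a full benchmark of such questions gives the “quick snapshot” of quality, though it does not always predict downstream performance, which is why extrinsic evaluations remain important.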

Best Practices for Using AI Embeddings

To get the most out of embeddings in your applications, follow these key principles:

- Know your domain: A general-purpose embedding trained on Wikipedia might not work well in a legal or medical context.

- Avoid overfitting: This is especially important when using high-dimensional embeddings on small datasets. Fine-tune with caution.

- Pre-process consistently: Tokenization, casing, and punctuation handling must be aligned between the embedding model and incoming data.

- Monitor performance: Regularly test embeddings as your application or data evolves.
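A minimal illustration of why preprocessing must match: if the vocabulary was built from lowercased, punctuation-free text, raw queries miss it. The `preprocess` function and the toy vocabulary are hypothetical; with a real model, reuse the exact tokenizer it was trained with rather than writing your own.

```python
import string

def preprocess(text: str) -> list[str]:
    """Hypothetical shared pipeline: lowercase, strip punctuation, split on whitespace."""
    table = str.maketrans("", "", string.punctuation)
    return text.lower().translate(table).split()

# Toy vocabulary built with the same preprocess() step used at query time.
vocab = set(preprocess("Apple pie, apple juice; and APPLE sauce."))

def oov_rate(tokens: list[str]) -> float:
    """Fraction of tokens missing from the embedding vocabulary."""
    return sum(t not in vocab for t in tokens) / len(tokens)

query = "Apple Juice!"
print(oov_rate(query.split()))      # mismatched: raw split keeps case and punctuation
print(oov_rate(preprocess(query)))  # aligned: same pipeline as the vocabulary
```

Tracking a metric like this out-of-vocabulary rate over time is also a cheap way to notice when incoming data drifts away from what the embedding model was built on.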

Conclusion

Embeddings are foundational to modern AI systems. Choosing the right model and dimensionality is not just a technical decision—it impacts the effectiveness, efficiency, and scalability of your entire AI solution. By understanding the nature of embedding models and the significance of vector dimensions, practitioners can make informed, strategic choices tailored to their specific applications.

With ongoing advances in large language models and efficient vector representation techniques, embeddings will continue to evolve. Staying informed and adaptable is key as we move toward more context-aware, scalable, and intelligent AI systems.